I am currently working on deep learning and large language model (LLM) adaptation for tabular data. If you are interested in academic discussion or collaboration, please feel free to email me at jyansir@zju.edu.cn.

I received my bachelor’s degree from Chu Kochen Honors College, Zhejiang University (浙江大学竺可桢学院), and am now a full-time PhD student in the College of Computer Science and Technology, Zhejiang University (浙江大学计算机科学与技术学院), advised by Jian Wu (吴健). I also collaborate closely with Assistant Professor Jintai Chen (陈晋泰) of the Hong Kong University of Science and Technology (HKUST).

My research interests include foundation models, relational deep learning, and neural architecture engineering for tabular data and multi-table prediction.

I am also an amateur photographer and an ACG (anime, comics, and games) enthusiast, and I am always happy to join related offline activities in my spare time.

📝 Publications

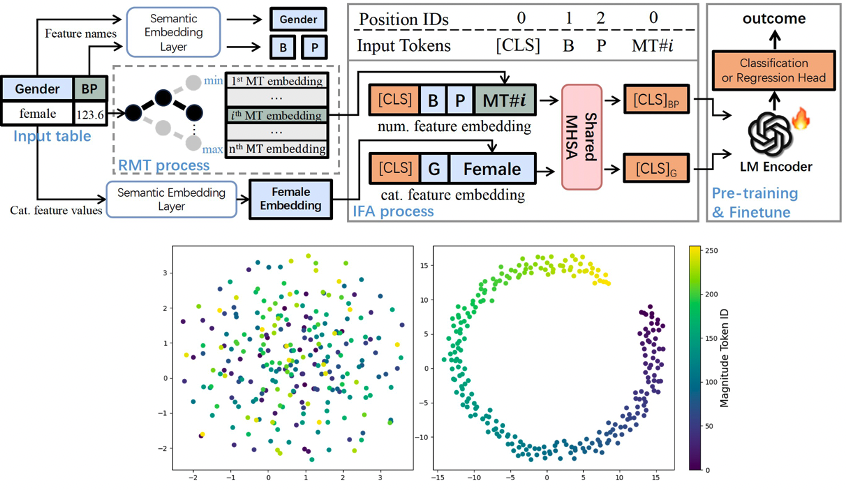

Making Pre-trained Language Models Great on Tabular Prediction

Jiahuan Yan, Bo Zheng, Hongxia Xu, Yiheng Zhu, Danny Z. Chen, Jimeng Sun, Jian Wu, Jintai Chen (Spotlight, notable top 5%)

- TL;DR: This work proposes relative magnitude tokenization, a distributed embedding technique for numerical features, and intra-feature attention, a mechanism that contextualizes each feature value with its feature name. Both are designed to adapt tabular features to modern Transformer-based LM architectures.

- Academic Impact: The resulting pre-trained LM, TP-BERTa, surpasses non-LM baselines on 145 downstream tabular prediction datasets, and a pivot analysis shows further significant improvement when discrete features dominate. This work is included in many tabular LLM paper repositories, such as Awesome-LLM-Tabular, and has been featured by well-known media outlets such as 新智元.

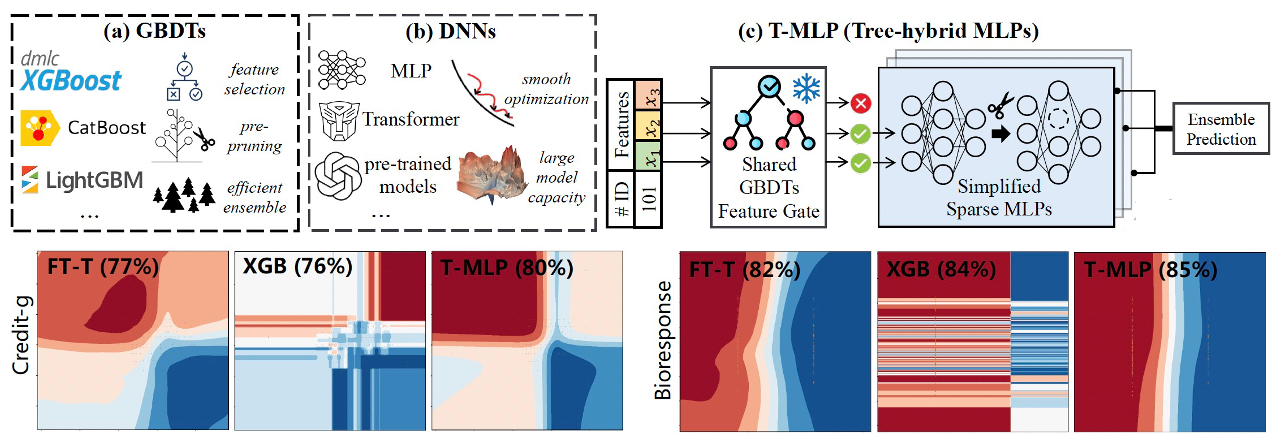

Team up GBDTs and DNNs: Advancing Efficient and Effective Tabular Prediction with Tree-hybrid MLPs

Jiahuan Yan, Jintai Chen, Qianxing Wang, Danny Z. Chen, Jian Wu (Oral)

- TL;DR: This work explores a GBDT-DNN hybrid framework to address the model selection dilemma in tabular prediction and introduces T-MLP, a tree-hybrid MLP architecture that combines the strengths of GBDTs and DNNs. T-MLP uses DNN capacity to emulate key ingredients of GBDT development, namely an entropy-driven feature gate, tree pruning, and model ensembling. Experiments on 88 datasets from 4 benchmarks (covering both DNN- and GBDT-favored ones) show that T-MLP is competitive with extensively tuned SOTA DNNs and GBDTs, while using pre-fixed hyperparameters, a compact model size, and reduced training time.

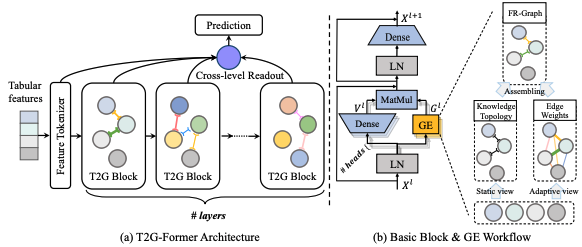

Jiahuan Yan, Jintai Chen, Yixuan Wu, Danny Z. Chen, Jian Wu (Oral, Top 20%)

- TL;DR: This work introduces T2G-Former, a Transformer guided by a feature relation (FR) graph for selective feature interaction. In each T2G block, a Graph Estimator automatically organizes an FR graph in a data-driven manner to guide feature fusion and suppress noisy signals, making feature interaction sparse and interpretable. The tuned model outperforms various DNNs and is comparable with XGBoost.

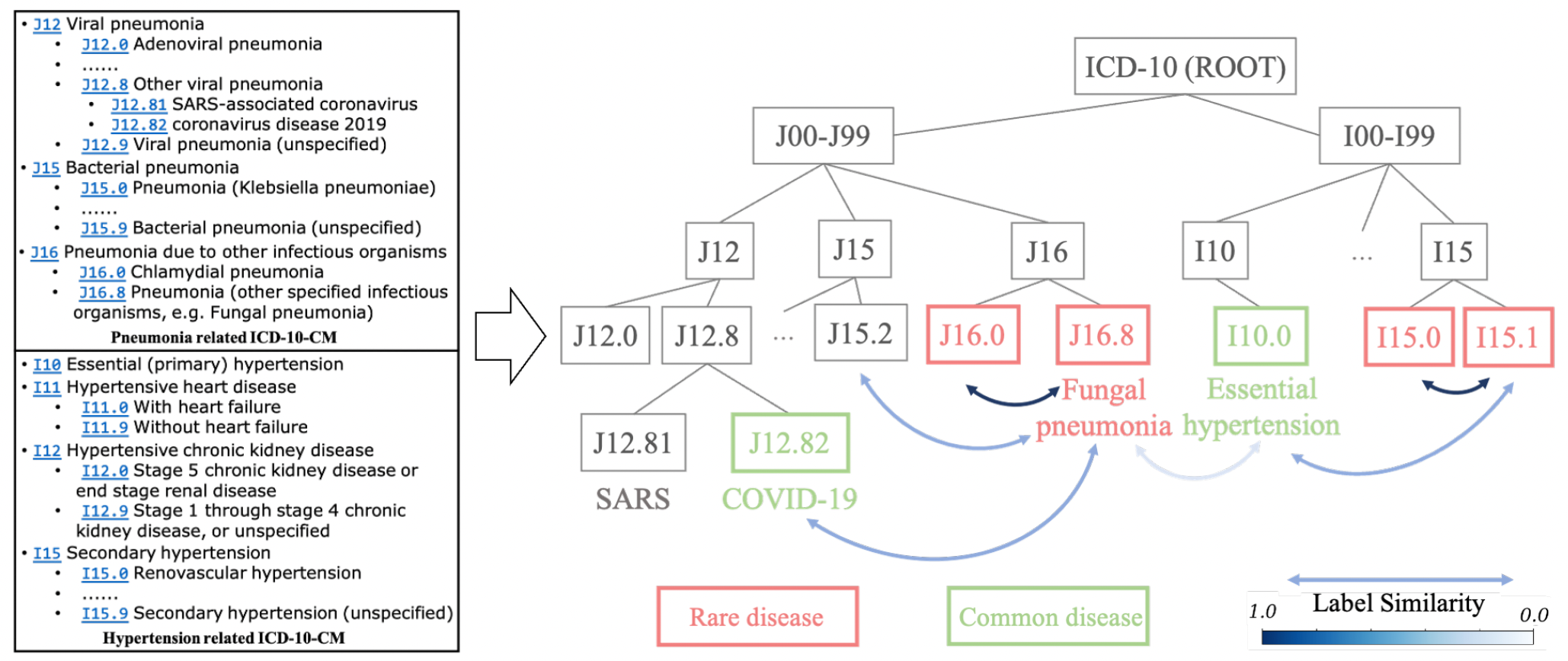

Jiahuan Yan, Haojun Gao, Zhang Kai, Weize Liu, Danny Z. Chen, Jian Wu, Jintai Chen (EMNLP Findings)

- TL;DR: This work proposes Text2Tree, a label-tree-guided algorithm for imbalanced text classification. It includes a cascade attention module for structure-based label embedding, similarity-based surrogate learning (a generalized form of supervised contrastive learning), and dissimilarity-based MixUp. Text2Tree outperforms baselines on ICD coding and serves as a complementary technique for imbalanced classification scenarios.

- Preprint 2024: SERVAL: Synergy Learning between Vertical Models and LLMs towards Oracle-Level Zero-shot Medical Prediction. Jiahuan Yan, Jintai Chen, Chaowen Hu, Bo Zheng, Yaojun Hu, Jimeng Sun, Jian Wu

- KDD 2024 (Oral): Can a Deep Learning Model be a Sure Bet for Tabular Prediction? Jintai Chen\*, Jiahuan Yan\* (equal contribution), Qiyuan Chen, Danny Z. Chen, Jian Wu | Exclusive Code Contributor | This work is included in the popular tabular deep learning library PyTorch Frame.

- IJCAI 2024: Personalized Heart Disease Detection via ECG Digital Twin Generation. Yaojun Hu, Jintai Chen, Lianting Hu, Dantong Li, Jiahuan Yan, Haochao Ying, Huiying Liang, Jian Wu

- ACM-MM 2023 (Oral): GCL: Gradient-Guided Contrastive Learning for Medical Image Segmentation with Multi-Perspective Meta Labels. Yixuan Wu, Jintai Chen, Jiahuan Yan, Yiheng Zhu, Danny Z. Chen, Jian Wu

- NeurIPS 2023: Sample-Efficient Multi-Objective Molecular Optimization with GFlowNets. Yiheng Zhu, Jialu Wu, Chaowen Hu, Jiahuan Yan, Kim Hsieh, Tingjun Hou, Jian Wu

- J-BHI 2023: Polygonal Approximation Learning for Convex Object Segmentation in Biomedical Images with Bounding Box Supervision. Wenhao Zheng, Jintai Chen, Kai Zhang, Jiahuan Yan, Jinhong Wang, Yi Cheng, Bang Du, Danny Z. Chen, Honghao Gao, Jian Wu, Hongxia Xu

🛠️ Open Source Projects

- Contributor to the deep tabular model and data preprocessing modules of the popular tabular deep learning library PyTorch Frame (created by the PyG team).

🎖 Honors and Awards

- 2025.03, Excellent Doctoral Graduate of Zhejiang University (Top 1%).

- 2024.10, National Scholarship of China (Top 1%).

- 2021.10, Excellent Graduate Student Cadre of Zhejiang University (Top 1%).

🔎 Academic Service

- Reviewer for Conferences: ICML 2025, ICLR 2025, KDD 2025, AISTATS 2025, NeurIPS 2024, ACL 2024, KDD 2024, EMNLP 2024, IJCAI 2024, ACM MM 2024.

- Reviewer for Journals: TKDE 2025.

📖 Education

- 2020.09 - now, PhD, Zhejiang University, Hangzhou.

- 2016.09 - 2020.06, Undergraduate, Chu Kochen Honors College, Zhejiang University, Hangzhou.